ACM MM 2024

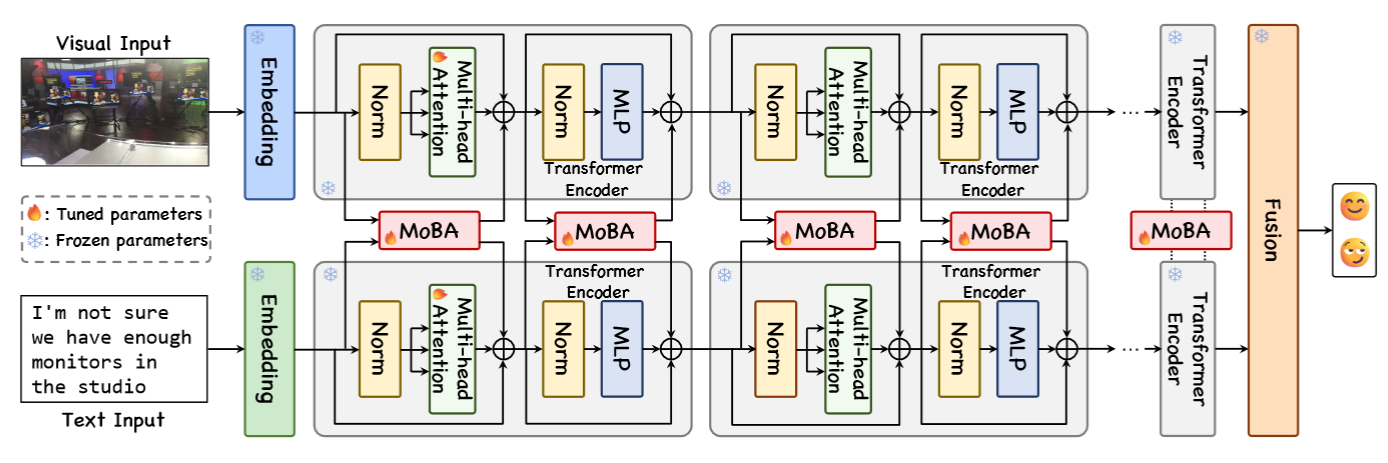

Here we present a novel approach that combines low-rank methods with a mixture of experts (MoE) to improve performance while preserving efficiency under dynamic intermodal interactions.

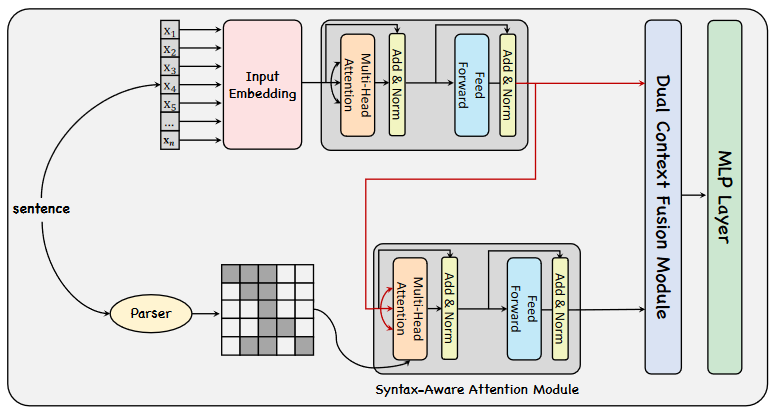

Here we show that syntax matters in spoken language understanding (SLU), improve SLU by injecting syntactic information into the attention module, and explore methods for fusing the original attention module with its syntax-aware counterpart.

Yifeng Xie, Zhihong Zhu, Xin Chen, Zhanpeng Chen, Zhiqi Huang (ACM MM 2024) [PDF] [OpenReview] [Code]

InfoEnh: Towards Multimodal Sentiment Analysis via Information Bottleneck Filter and Optimal Transport Alignment

Yifeng Xie, Zhihong Zhu, Xuan Lu, Zhiqi Huang, Haoran Xiong. Proceedings of the 2024 Joint International Conference on Computational Linguistics, Language Resources and Evaluation (LREC-COLING 2024) [PDF] [Link]

Yifeng Xie, Zhihong Zhu, Xuxin Cheng, Zhiqi Huang, Dongsheng Chen. Findings of the Association for Computational Linguistics: EMNLP 2023 (EMNLP Findings 2023) [PDF] [Link]